Published: Wednesday, June 19, 2019 14:08

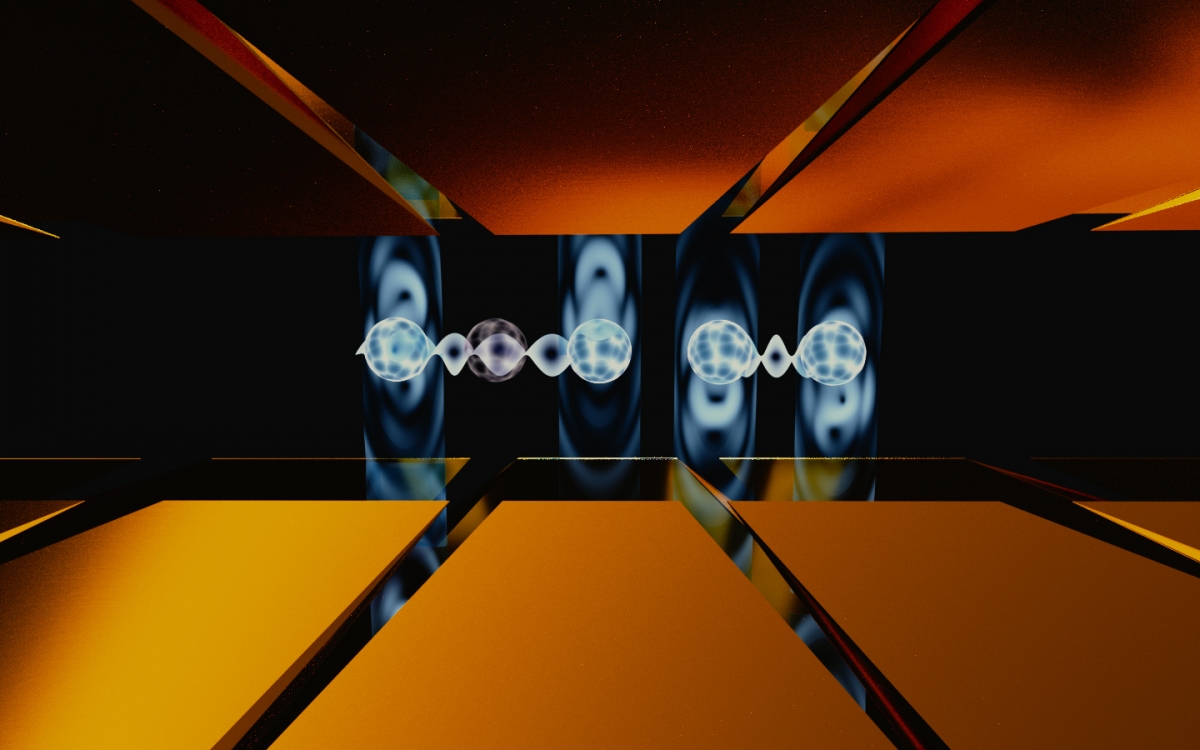

In Klein tunneling, an electron can transit perfectly through a barrier. In a new experiment, researchers observed the Klein tunneling of electrons into a special kind of superconductor. (Credit: E. Edwards/JQI)

A junction between an ordinary metal and a special kind of superconductor has provided a robust platform to observe Klein tunneling.

Researchers at the University of Maryland have captured the most direct evidence to date of a quantum quirk that allows particles to tunnel through a barrier like it’s not even there. The result, featured on the cover of the June 20, 2019 issue of the journal Nature, may enable engineers to design more uniform components for future quantum computers, quantum sensors and other devices.

The new experiment is an observation of Klein tunneling, a special case of a more ordinary quantum phenomenon. In the quantum world, tunneling allows particles like electrons to pass through a barrier even if they don’t have enough energy to actually climb over it. A taller barrier usually makes this harder and lets fewer particles through.
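To make the ordinary case concrete, here is a short illustrative sketch that is not part of the study. It evaluates the standard textbook transmission probability for a slow (non-relativistic) electron with energy E hitting a rectangular barrier of height V0 and width a, with E below V0: T = 1 / (1 + V0² sinh²(κa) / (4E(V0 − E))), where κ = √(2m(V0 − E))/ħ. The 1 eV electron energy and 0.5 nm barrier width are arbitrary example values chosen for illustration.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
M_E = 9.1093837015e-31  # electron mass, kg
EV = 1.602176634e-19    # one electronvolt in joules


def transmission(energy_ev: float, barrier_ev: float, width_nm: float) -> float:
    """Textbook tunneling probability of a non-relativistic electron through a
    rectangular barrier, valid only for 0 < energy < barrier height."""
    if not 0 < energy_ev < barrier_ev:
        raise ValueError("this formula assumes 0 < E < V0")
    e = energy_ev * EV
    v0 = barrier_ev * EV
    # Decay constant of the wavefunction inside the barrier, in 1/m.
    kappa = math.sqrt(2 * M_E * (v0 - e)) / HBAR
    s = math.sinh(kappa * width_nm * 1e-9)
    return 1.0 / (1.0 + (v0 ** 2 * s ** 2) / (4 * e * (v0 - e)))


if __name__ == "__main__":
    # Same 1 eV electron, same 0.5 nm barrier, increasingly tall barriers.
    for v0 in (2.0, 4.0, 8.0):
        print(f"V0 = {v0} eV: T = {transmission(1.0, v0, 0.5):.3e}")
```

The printed transmission falls steeply as the barrier grows taller (a wider barrier has the same effect). That dependence on barrier height is what Klein tunneling escapes.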

Klein tunneling occurs when the barrier becomes completely transparent, opening up a portal that particles can traverse regardless of the barrier’s height. Scientists and engineers from UMD’s Center for Nanophysics and Advanced Materials (CNAM), the Joint Quantum Institute (JQI) and the Condensed Matter Theory Center (CMTC), with appointments in UMD’s Department of Materials Science and Engineering and Department of Physics, have made the most compelling measurements yet of the effect.

“Klein tunneling was originally a relativistic effect, first predicted almost a hundred years ago,” says Ichiro Takeuchi, a professor of materials science and engineering (MSE) at UMD and the senior author of the new study. “Until recently, though, you could not observe it.”

It was nearly impossible to collect evidence for Klein tunneling where it was first predicted—the world of high-energy quantum particles moving close to the speed of light. But in the past several decades, scientists have discovered that some of the rules governing fast-moving quantum particles also apply to the comparatively sluggish particles traveling near the surface of some unusual materials.

One such material—which researchers used in the new study—is samarium hexaboride (SmB6), a substance that becomes a topological insulator at low temperatures. In a normal insulator like wood, rubber or air, electrons are trapped, unable to move even when voltage is applied. Thus, unlike their free-roaming comrades in a metal wire, electrons in an insulator can’t conduct a current.

Topological insulators such as SmB6 behave like hybrid materials. At low enough temperatures, the interior of SmB6 is an insulator, but the surface is metallic and allows electrons some freedom to move around. Additionally, the direction that the electrons move becomes locked to an intrinsic quantum property called spin that can be oriented up or down. Electrons moving to the right will always have their spin pointing up, for example, and electrons moving left will have their spin pointing down.

The metallic surface of SmB6 would not have been enough to spot Klein tunneling, though. It turned out that Takeuchi and colleagues needed to transform the surface of SmB6 into a superconductor—a material that can conduct electrical current without any resistance.

To turn SmB6 into a superconductor, they put a thin film of it atop a layer of yttrium hexaboride (YB6). When the whole assembly was cooled to just a few degrees above absolute zero, the YB6 became a superconductor and, due to its proximity, the metallic surface of SmB6 became a superconductor, too.

It was a “piece of serendipity” that SmB6 and its yttrium-swapped relative shared the same crystal structure, says Johnpierre Paglione, a professor of physics at UMD, the director of CNAM and a co-author of the research paper. “However, the multidisciplinary team we have was one of the keys to this success. Having experts on topological physics, thin-film synthesis, spectroscopy and theoretical understanding really got us to this point,” Paglione adds.

The combination proved the right mix to observe Klein tunneling. By bringing a tiny metal tip into contact with the top of the SmB6, the team measured the transport of electrons from the tip into the superconductor. They observed that the conductance (a measure of how the current through a material changes as the voltage across it is varied) was exactly double its normal-state value.

“When we first observed the doubling, I didn’t believe it,” Takeuchi says. “After all, it is an unusual observation, so I asked my postdoc Seunghun Lee and research scientist Xiaohang Zhang to go back and do the experiment again.”

Once Takeuchi and his experimental colleagues had convinced themselves that the measurements were accurate, they still needed to understand the source of the doubled conductance. So they started searching for an explanation. UMD’s Victor Galitski, a JQI Fellow, a professor of physics and a member of CMTC, suggested that Klein tunneling might be involved.

“At first, it was just a hunch,” Galitski says. “But over time we grew more convinced that the Klein scenario may actually be the underlying cause of the observations.”

Valentin Stanev, an associate research scientist in MSE and a research scientist at JQI, took Galitski’s hunch and worked out a careful theory of how Klein tunneling could emerge in the SmB6 system—ultimately making predictions that matched the experimental data well.

The theory suggested that Klein tunneling manifests itself in this system as a perfect form of Andreev reflection, an effect present at every boundary between a metal and a superconductor. Andreev reflection can occur whenever an electron from the metal hops onto a superconductor. Inside the superconductor, electrons are forced to live in pairs, so when an electron hops on, it picks up a buddy.

In order to balance the electric charge before and after the hop, a particle with the opposite charge, which scientists call a hole, must reflect back into the metal. This is the hallmark of Andreev reflection: an electron goes in, a hole comes back out. Since a hole moving in one direction carries the same current as an electron moving in the opposite direction, each such event moves twice the charge of an ordinary electron crossing the boundary. When every incoming electron is Andreev reflected, the overall conductance therefore doubles exactly, and that exact doubling is the signature of Klein tunneling through a junction of a metal and a topological superconductor.

In conventional junctions between a metal and a superconductor, there are always some electrons that don’t make the hop. They scatter off the boundary, reducing the amount of Andreev reflection and preventing an exact doubling of the conductance.

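But because the electrons in the surface of SmB6 have their direction of motion tied to their spin, an electron that bounced straight back off the boundary would also have to flip its spin, which an ordinary, nonmagnetic boundary cannot do. Electrons near the boundary therefore can’t bounce back, meaning that they will always transit straight into the superconductor.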
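The bookkeeping of Andreev versus ordinary reflection can be made quantitative with the Blonder-Tinkham-Klapwijk (BTK) model, a standard textbook description of a junction between a normal metal and a conventional superconductor at zero temperature. It is offered here only as an illustration under that assumption: it is not the theory Stanev developed for SmB6, and its dimensionless barrier strength Z, which lumps together all scattering at the interface, is a model parameter rather than a quantity reported in the study. A is the probability of Andreev reflection, B the probability of ordinary reflection, and the conductance normalized to the junction's normal-state value is (1 + A − B)(1 + Z²).

```python
import math


def btk_conductance(energy: float, gap: float, z: float) -> float:
    """Normalized zero-temperature conductance G_NS / G_NN of a normal-metal /
    conventional-superconductor junction in the BTK model.

    energy: bias energy eV (same units as gap); gap: superconducting gap Delta;
    z: dimensionless barrier strength (z = 0 means no interface scattering).
    """
    if gap <= 0:
        raise ValueError("gap must be positive")
    e = abs(energy)
    if e <= gap:
        # No quasiparticle states inside the gap: the electron is either
        # Andreev reflected (A) or normally reflected (B = 1 - A).
        a = gap ** 2 / (e ** 2 + (gap ** 2 - e ** 2) * (1 + 2 * z ** 2) ** 2)
        b = 1.0 - a
    else:
        # Above the gap, quasiparticles can also be transmitted, so A + B < 1.
        u2 = 0.5 * (1 + math.sqrt((e ** 2 - gap ** 2) / e ** 2))
        v2 = 1.0 - u2
        gamma = u2 + (u2 - v2) * z ** 2
        a = u2 * v2 / gamma ** 2
        b = (u2 - v2) ** 2 * z ** 2 * (1 + z ** 2) / gamma ** 2
    # Incoming electron counts once, a reflected hole adds a second unit of
    # current, a normally reflected electron cancels its own contribution.
    # Multiplying by (1 + z**2) divides out the normal-state conductance,
    # which in BTK scales as 1 / (1 + z**2).
    return (1 + a - b) * (1 + z ** 2)


if __name__ == "__main__":
    # Zero-bias conductance for a few barrier strengths, with the gap set to 1.
    for z in (0.0, 0.5, 1.0):
        print(f"Z = {z}: G/G_N at zero bias = {btk_conductance(0.0, 1.0, z):.3f}")
```

Running it prints 2.000, 1.111 and 0.444: only a scattering-free interface gives the exact doubling, and any backscattering pulls the conductance below it. In this simplified picture, the suppressed backscattering on the SmB6 surface acts roughly like pinning Z at zero regardless of the actual barrier.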

“Klein tunneling had been seen in graphene as well,” Takeuchi says. “But here, because it’s a superconductor, I would say the effect is more spectacular. You get this exact doubling and a complete cancellation of the scattering, and there is no analog of that in the graphene experiment.”

Junctions between superconductors and other materials are ingredients in some proposed quantum computer architectures, as well as in precision sensing devices. The bane of these components has always been that each junction is slightly different, Takeuchi says, requiring endless tuning and calibration to reach the best performance. But with Klein tunneling in SmB6, researchers might finally have an antidote to that irregularity.

“In electronics, device-to-device spread is the number one enemy,” Takeuchi says. “Here is a phenomenon that gets rid of the variability.”

Story by Chris Cesare

In addition to Takeuchi, Paglione, Lee, Zhang, Galitski and Stanev, co-authors of the research paper include Drew Stasak, a former research assistant in MSE; Jack Flowers, a former graduate student in MSE; Joshua S. Higgins, a research scientist in CNAM and the Department of Physics; Sheng Dai, a research fellow in the Department of Chemical Engineering and Materials Science at the University of California, Irvine (UCI); Thomas Blum, a graduate student in physics and astronomy at UCI; Xiaoqing Pan, a professor of chemical engineering and materials science and of physics and astronomy at UCI; Victor M. Yakovenko, a JQI Fellow, professor of physics at UMD and a member of CMTC; and Richard L. Greene, a professor of physics at UMD and a member of CNAM.